mirror of

https://github.com/XRPLF/clio.git

synced 2025-11-16 17:55:50 +00:00

Compare commits

24 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

dfe18ed682 | ||

|

|

92a072d7a8 | ||

|

|

24fca61b56 | ||

|

|

ae8303fdc8 | ||

|

|

709a8463b8 | ||

|

|

84d31986d1 | ||

|

|

d50f229631 | ||

|

|

379c89fb02 | ||

|

|

81f7171368 | ||

|

|

629b35d1dd | ||

|

|

6fc4cee195 | ||

|

|

b01813ac3d | ||

|

|

6bf8c5bc4e | ||

|

|

2ffd98f895 | ||

|

|

3edead32ba | ||

|

|

28980734ae | ||

|

|

ce60c8f64d | ||

|

|

39ef2ae33c | ||

|

|

d83975e750 | ||

|

|

4468302852 | ||

|

|

a704cf7cfe | ||

|

|

05d09cc352 | ||

|

|

ae96ac7baf | ||

|

|

4579fa2f26 |

61

.github/workflows/build.yml

vendored

61

.github/workflows/build.yml

vendored

@@ -1,9 +1,9 @@

|

||||

name: Build Clio

|

||||

on:

|

||||

push:

|

||||

branches: [master, develop, develop-next]

|

||||

branches: [master, release, develop, develop-next]

|

||||

pull_request:

|

||||

branches: [master, develop, develop-next]

|

||||

branches: [master, release, develop, develop-next]

|

||||

workflow_dispatch:

|

||||

|

||||

jobs:

|

||||

@@ -18,9 +18,8 @@ jobs:

|

||||

uses: XRPLF/clio-gha/lint@main

|

||||

|

||||

build_clio:

|

||||

name: Build

|

||||

name: Build Clio

|

||||

runs-on: [self-hosted, Linux]

|

||||

needs: lint

|

||||

steps:

|

||||

|

||||

- name: Clone Clio repo

|

||||

@@ -34,26 +33,62 @@ jobs:

|

||||

path: clio_ci

|

||||

repository: 'XRPLF/clio-ci'

|

||||

|

||||

- name: Clone GitHub actions repo

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

repository: XRPLF/clio-gha

|

||||

path: gha # must be the same as defined in XRPLF/clio-gha

|

||||

|

||||

- name: Build

|

||||

uses: XRPLF/clio-gha/build@main

|

||||

|

||||

- name: Artifact packages

|

||||

uses: actions/upload-artifact@v3

|

||||

with:

|

||||

name: clio_packages

|

||||

path: ${{ github.workspace }}/*.deb

|

||||

|

||||

- name: Artifact clio_tests

|

||||

uses: actions/upload-artifact@v2

|

||||

uses: actions/upload-artifact@v3

|

||||

with:

|

||||

name: clio_tests

|

||||

path: clio_tests

|

||||

|

||||

sign:

|

||||

name: Sign packages

|

||||

needs: build_clio

|

||||

runs-on: ubuntu-20.04

|

||||

if: github.ref == 'refs/heads/master' || github.ref == 'refs/heads/release' || github.ref == 'refs/heads/develop'

|

||||

env:

|

||||

GPG_KEY_B64: ${{ secrets.GPG_KEY_B64 }}

|

||||

GPG_KEY_PASS_B64: ${{ secrets.GPG_KEY_PASS_B64 }}

|

||||

|

||||

steps:

|

||||

- name: Get package artifact

|

||||

uses: actions/download-artifact@v3

|

||||

with:

|

||||

name: clio_packages

|

||||

|

||||

- name: find packages

|

||||

run: find . -name "*.deb"

|

||||

|

||||

- name: Install dpkg-sig

|

||||

run: |

|

||||

sudo apt-get update && sudo apt-get install -y dpkg-sig

|

||||

|

||||

- name: Sign Debian packages

|

||||

uses: XRPLF/clio-gha/sign@main

|

||||

|

||||

- name: Verify the signature

|

||||

run: |

|

||||

set -e

|

||||

for PKG in $(ls *.deb); do

|

||||

gpg --verify "${PKG}"

|

||||

done

|

||||

|

||||

- name: Get short SHA

|

||||

id: shortsha

|

||||

run: echo "::set-output name=sha8::$(echo ${GITHUB_SHA} | cut -c1-8)"

|

||||

|

||||

- name: Artifact Debian package

|

||||

uses: actions/upload-artifact@v2

|

||||

with:

|

||||

name: deb_package-${{ github.sha }}

|

||||

path: clio_ci/build/*.deb

|

||||

name: clio-deb-packages-${{ steps.shortsha.outputs.sha8 }}

|

||||

path: ${{ github.workspace }}/*.deb

|

||||

|

||||

test_clio:

|

||||

name: Test Clio

|

||||

|

||||

24

CMake/deps/Remove-bitset-operator.patch

Normal file

24

CMake/deps/Remove-bitset-operator.patch

Normal file

@@ -0,0 +1,24 @@

|

||||

From 5cd9d09d960fa489a0c4379880cd7615b1c16e55 Mon Sep 17 00:00:00 2001

|

||||

From: CJ Cobb <ccobb@ripple.com>

|

||||

Date: Wed, 10 Aug 2022 12:30:01 -0400

|

||||

Subject: [PATCH] Remove bitset operator !=

|

||||

|

||||

---

|

||||

src/ripple/protocol/Feature.h | 1 -

|

||||

1 file changed, 1 deletion(-)

|

||||

|

||||

diff --git a/src/ripple/protocol/Feature.h b/src/ripple/protocol/Feature.h

|

||||

index b3ecb099b..6424be411 100644

|

||||

--- a/src/ripple/protocol/Feature.h

|

||||

+++ b/src/ripple/protocol/Feature.h

|

||||

@@ -126,7 +126,6 @@ class FeatureBitset : private std::bitset<detail::numFeatures>

|

||||

public:

|

||||

using base::bitset;

|

||||

using base::operator==;

|

||||

- using base::operator!=;

|

||||

|

||||

using base::all;

|

||||

using base::any;

|

||||

--

|

||||

2.32.0

|

||||

|

||||

@@ -1,11 +1,13 @@

|

||||

set(RIPPLED_REPO "https://github.com/ripple/rippled.git")

|

||||

set(RIPPLED_BRANCH "1.9.0")

|

||||

set(RIPPLED_BRANCH "1.9.2")

|

||||

set(NIH_CACHE_ROOT "${CMAKE_CURRENT_BINARY_DIR}" CACHE INTERNAL "")

|

||||

set(patch_command ! grep operator!= src/ripple/protocol/Feature.h || git apply < ${CMAKE_CURRENT_SOURCE_DIR}/CMake/deps/Remove-bitset-operator.patch)

|

||||

message(STATUS "Cloning ${RIPPLED_REPO} branch ${RIPPLED_BRANCH}")

|

||||

FetchContent_Declare(rippled

|

||||

GIT_REPOSITORY "${RIPPLED_REPO}"

|

||||

GIT_TAG "${RIPPLED_BRANCH}"

|

||||

GIT_SHALLOW ON

|

||||

PATCH_COMMAND "${patch_command}"

|

||||

)

|

||||

|

||||

FetchContent_GetProperties(rippled)

|

||||

|

||||

@@ -2,6 +2,10 @@ cmake_minimum_required(VERSION 3.16.3)

|

||||

|

||||

project(clio)

|

||||

|

||||

if(CMAKE_CXX_COMPILER_VERSION VERSION_LESS 11)

|

||||

message(FATAL_ERROR "GCC 11+ required for building clio")

|

||||

endif()

|

||||

|

||||

option(BUILD_TESTS "Build tests" TRUE)

|

||||

|

||||

option(VERBOSE "Verbose build" TRUE)

|

||||

@@ -12,7 +16,7 @@ endif()

|

||||

|

||||

if(NOT GIT_COMMIT_HASH)

|

||||

if(VERBOSE)

|

||||

message(WARNING "GIT_COMMIT_HASH not provided...looking for git")

|

||||

message("GIT_COMMIT_HASH not provided...looking for git")

|

||||

endif()

|

||||

find_package(Git)

|

||||

if(Git_FOUND)

|

||||

@@ -45,12 +49,12 @@ target_sources(clio PRIVATE

|

||||

## Backend

|

||||

src/backend/BackendInterface.cpp

|

||||

src/backend/CassandraBackend.cpp

|

||||

src/backend/LayeredCache.cpp

|

||||

src/backend/Pg.cpp

|

||||

src/backend/PostgresBackend.cpp

|

||||

src/backend/SimpleCache.cpp

|

||||

## ETL

|

||||

src/etl/ETLSource.cpp

|

||||

src/etl/NFTHelpers.cpp

|

||||

src/etl/ReportingETL.cpp

|

||||

## Subscriptions

|

||||

src/subscriptions/SubscriptionManager.cpp

|

||||

@@ -69,6 +73,8 @@ target_sources(clio PRIVATE

|

||||

src/rpc/handlers/AccountObjects.cpp

|

||||

src/rpc/handlers/GatewayBalances.cpp

|

||||

src/rpc/handlers/NoRippleCheck.cpp

|

||||

# NFT

|

||||

src/rpc/handlers/NFTInfo.cpp

|

||||

# Ledger

|

||||

src/rpc/handlers/Ledger.cpp

|

||||

src/rpc/handlers/LedgerData.cpp

|

||||

|

||||

37

README.md

37

README.md

@@ -1,9 +1,3 @@

|

||||

[](https://github.com/legleux/clio/actions/workflows/build.yml)

|

||||

|

||||

|

||||

**Status:** This software is in beta mode. We encourage anyone to try it out and

|

||||

report any issues they discover. Version 1.0 coming soon.

|

||||

|

||||

# Clio

|

||||

Clio is an XRP Ledger API server. Clio is optimized for RPC calls, over WebSocket or JSON-RPC. Validated

|

||||

historical ledger and transaction data are stored in a more space-efficient format,

|

||||

@@ -28,7 +22,7 @@ from which data can be extracted. The rippled node does not need to be running o

|

||||

|

||||

## Building

|

||||

|

||||

Clio is built with CMake. Clio requires c++20, and boost 1.75.0 or later.

|

||||

Clio is built with CMake. Clio requires at least GCC-11 (C++20), and Boost 1.75.0 or later.

|

||||

|

||||

Use these instructions to build a Clio executable from the source. These instructions were tested on Ubuntu 20.04 LTS.

|

||||

|

||||

@@ -146,25 +140,38 @@ which can cause high latencies. A possible alternative to this is to just deploy

|

||||

a database in each region, and the Clio nodes in each region use their region's database.

|

||||

This is effectively two systems.

|

||||

|

||||

## Developing against `rippled` in standalone mode

|

||||

|

||||

If you wish you develop against a `rippled` instance running in standalone

|

||||

mode there are a few quirks of both clio and rippled you need to keep in mind.

|

||||

You must:

|

||||

|

||||

1. Advance the `rippled` ledger to at least ledger 256

|

||||

2. Wait 10 minutes before first starting clio against this standalone node.

|

||||

|

||||

## Logging

|

||||

Clio provides several logging options, all are configurable via the config file and are detailed below.

|

||||

|

||||

`log_level`: The minimum level of severity at which the log message will be outputted.

|

||||

Severity options are `trace`, `debug`, `info`, `warning`, `error`, `fatal`.

|

||||

`log_level`: The minimum level of severity at which the log message will be outputted.

|

||||

Severity options are `trace`, `debug`, `info`, `warning`, `error`, `fatal`.

|

||||

|

||||

`log_to_console`: Enable/disable log output to console. Options are `true`/`false`.

|

||||

`log_to_console`: Enable/disable log output to console. Options are `true`/`false`. Defaults to true.

|

||||

|

||||

`log_to_file`: Enable/disable log saving to files in persistent local storage. Options are `true`/`false`.

|

||||

|

||||

`log_directory`: Path to the directory where log files are stored. If such directory doesn't exist, Clio will create it.

|

||||

`log_directory`: Path to the directory where log files are stored. If such directory doesn't exist, Clio will create it. If not specified, logs are not written to a file.

|

||||

|

||||

`log_rotation_size`: The max size of the log file in **megabytes** before it will rotate into a smaller file.

|

||||

|

||||

`log_directory_max_size`: The max size of the log directory in **megabytes** before old log files will be

|

||||

`log_directory_max_size`: The max size of the log directory in **megabytes** before old log files will be

|

||||

deleted to free up space.

|

||||

|

||||

`log_rotation_hour_interval`: The time interval in **hours** after the last log rotation to automatically

|

||||

rotate the current log file.

|

||||

|

||||

Note, time-based log rotation occurs dependently on size-based log rotation, where if a

|

||||

size-based log rotation occurs, the timer for the time-based rotation will reset.

|

||||

size-based log rotation occurs, the timer for the time-based rotation will reset.

|

||||

|

||||

## Cassandra / Scylla Administration

|

||||

|

||||

Since Clio relies on either Cassandra or Scylla for its database backend, here are some important considerations:

|

||||

|

||||

- Scylla, by default, will reserve all free RAM on a machine for itself. If you are running `rippled` or other services on the same machine, restrict its memory usage using the `--memory` argument: https://docs.scylladb.com/getting-started/scylla-in-a-shared-environment/

|

||||

|

||||

@@ -276,7 +276,8 @@ BackendInterface::fetchLedgerPage(

|

||||

else if (!outOfOrder)

|

||||

{

|

||||

BOOST_LOG_TRIVIAL(error)

|

||||

<< __func__ << " incorrect successor table. key = "

|

||||

<< __func__

|

||||

<< " deleted or non-existent object in successor table. key = "

|

||||

<< ripple::strHex(keys[i]) << " - seq = " << ledgerSequence;

|

||||

std::stringstream msg;

|

||||

for (size_t j = 0; j < objects.size(); ++j)

|

||||

@@ -284,7 +285,6 @@ BackendInterface::fetchLedgerPage(

|

||||

msg << " - " << ripple::strHex(keys[j]);

|

||||

}

|

||||

BOOST_LOG_TRIVIAL(error) << __func__ << msg.str();

|

||||

assert(false);

|

||||

}

|

||||

}

|

||||

if (keys.size() && !reachedEnd)

|

||||

|

||||

@@ -162,12 +162,12 @@ public:

|

||||

std::vector<ripple::uint256> const& hashes,

|

||||

boost::asio::yield_context& yield) const = 0;

|

||||

|

||||

virtual AccountTransactions

|

||||

virtual TransactionsAndCursor

|

||||

fetchAccountTransactions(

|

||||

ripple::AccountID const& account,

|

||||

std::uint32_t const limit,

|

||||

bool forward,

|

||||

std::optional<AccountTransactionsCursor> const& cursor,

|

||||

std::optional<TransactionsCursor> const& cursor,

|

||||

boost::asio::yield_context& yield) const = 0;

|

||||

|

||||

virtual std::vector<TransactionAndMetadata>

|

||||

@@ -180,6 +180,21 @@ public:

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const = 0;

|

||||

|

||||

// *** NFT methods

|

||||

virtual std::optional<NFT>

|

||||

fetchNFT(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const = 0;

|

||||

|

||||

virtual TransactionsAndCursor

|

||||

fetchNFTTransactions(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const limit,

|

||||

bool const forward,

|

||||

std::optional<TransactionsCursor> const& cursorIn,

|

||||

boost::asio::yield_context& yield) const = 0;

|

||||

|

||||

// *** state data methods

|

||||

std::optional<Blob>

|

||||

fetchLedgerObject(

|

||||

@@ -285,9 +300,15 @@ public:

|

||||

std::string&& transaction,

|

||||

std::string&& metadata) = 0;

|

||||

|

||||

virtual void

|

||||

writeNFTs(std::vector<NFTsData>&& data) = 0;

|

||||

|

||||

virtual void

|

||||

writeAccountTransactions(std::vector<AccountTransactionsData>&& data) = 0;

|

||||

|

||||

virtual void

|

||||

writeNFTTransactions(std::vector<NFTTransactionsData>&& data) = 0;

|

||||

|

||||

virtual void

|

||||

writeSuccessor(

|

||||

std::string&& key,

|

||||

|

||||

@@ -1,7 +1,9 @@

|

||||

#include <ripple/app/tx/impl/details/NFTokenUtils.h>

|

||||

#include <backend/CassandraBackend.h>

|

||||

#include <backend/DBHelpers.h>

|

||||

#include <functional>

|

||||

#include <unordered_map>

|

||||

|

||||

namespace Backend {

|

||||

|

||||

// Type alias for async completion handlers

|

||||

@@ -256,6 +258,7 @@ CassandraBackend::writeLedger(

|

||||

"ledger_hash");

|

||||

ledgerSequence_ = ledgerInfo.seq;

|

||||

}

|

||||

|

||||

void

|

||||

CassandraBackend::writeAccountTransactions(

|

||||

std::vector<AccountTransactionsData>&& data)

|

||||

@@ -266,11 +269,11 @@ CassandraBackend::writeAccountTransactions(

|

||||

{

|

||||

makeAndExecuteAsyncWrite(

|

||||

this,

|

||||

std::move(std::make_tuple(

|

||||

std::make_tuple(

|

||||

std::move(account),

|

||||

record.ledgerSequence,

|

||||

record.transactionIndex,

|

||||

record.txHash)),

|

||||

record.txHash),

|

||||

[this](auto& params) {

|

||||

CassandraStatement statement(insertAccountTx_);

|

||||

auto& [account, lgrSeq, txnIdx, hash] = params.data;

|

||||

@@ -283,6 +286,31 @@ CassandraBackend::writeAccountTransactions(

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

void

|

||||

CassandraBackend::writeNFTTransactions(std::vector<NFTTransactionsData>&& data)

|

||||

{

|

||||

for (NFTTransactionsData const& record : data)

|

||||

{

|

||||

makeAndExecuteAsyncWrite(

|

||||

this,

|

||||

std::make_tuple(

|

||||

record.tokenID,

|

||||

record.ledgerSequence,

|

||||

record.transactionIndex,

|

||||

record.txHash),

|

||||

[this](auto const& params) {

|

||||

CassandraStatement statement(insertNFTTx_);

|

||||

auto const& [tokenID, lgrSeq, txnIdx, txHash] = params.data;

|

||||

statement.bindNextBytes(tokenID);

|

||||

statement.bindNextIntTuple(lgrSeq, txnIdx);

|

||||

statement.bindNextBytes(txHash);

|

||||

return statement;

|

||||

},

|

||||

"nf_token_transactions");

|

||||

}

|

||||

}

|

||||

|

||||

void

|

||||

CassandraBackend::writeTransaction(

|

||||

std::string&& hash,

|

||||

@@ -325,6 +353,43 @@ CassandraBackend::writeTransaction(

|

||||

"transaction");

|

||||

}

|

||||

|

||||

void

|

||||

CassandraBackend::writeNFTs(std::vector<NFTsData>&& data)

|

||||

{

|

||||

for (NFTsData const& record : data)

|

||||

{

|

||||

makeAndExecuteAsyncWrite(

|

||||

this,

|

||||

std::make_tuple(

|

||||

record.tokenID,

|

||||

record.ledgerSequence,

|

||||

record.owner,

|

||||

record.isBurned),

|

||||

[this](auto const& params) {

|

||||

CassandraStatement statement{insertNFT_};

|

||||

auto const& [tokenID, lgrSeq, owner, isBurned] = params.data;

|

||||

statement.bindNextBytes(tokenID);

|

||||

statement.bindNextInt(lgrSeq);

|

||||

statement.bindNextBytes(owner);

|

||||

statement.bindNextBoolean(isBurned);

|

||||

return statement;

|

||||

},

|

||||

"nf_tokens");

|

||||

|

||||

makeAndExecuteAsyncWrite(

|

||||

this,

|

||||

std::make_tuple(record.tokenID),

|

||||

[this](auto const& params) {

|

||||

CassandraStatement statement{insertIssuerNFT_};

|

||||

auto const& [tokenID] = params.data;

|

||||

statement.bindNextBytes(ripple::nft::getIssuer(tokenID));

|

||||

statement.bindNextBytes(tokenID);

|

||||

return statement;

|

||||

},

|

||||

"issuer_nf_tokens");

|

||||

}

|

||||

}

|

||||

|

||||

std::optional<LedgerRange>

|

||||

CassandraBackend::hardFetchLedgerRange(boost::asio::yield_context& yield) const

|

||||

{

|

||||

@@ -502,12 +567,113 @@ CassandraBackend::fetchAllTransactionHashesInLedger(

|

||||

return hashes;

|

||||

}

|

||||

|

||||

AccountTransactions

|

||||

std::optional<NFT>

|

||||

CassandraBackend::fetchNFT(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const

|

||||

{

|

||||

CassandraStatement statement{selectNFT_};

|

||||

statement.bindNextBytes(tokenID);

|

||||

statement.bindNextInt(ledgerSequence);

|

||||

CassandraResult response = executeAsyncRead(statement, yield);

|

||||

if (!response)

|

||||

return {};

|

||||

|

||||

NFT result;

|

||||

result.tokenID = tokenID;

|

||||

result.ledgerSequence = response.getUInt32();

|

||||

result.owner = response.getBytes();

|

||||

result.isBurned = response.getBool();

|

||||

return result;

|

||||

}

|

||||

|

||||

TransactionsAndCursor

|

||||

CassandraBackend::fetchNFTTransactions(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const limit,

|

||||

bool const forward,

|

||||

std::optional<TransactionsCursor> const& cursorIn,

|

||||

boost::asio::yield_context& yield) const

|

||||

{

|

||||

auto cursor = cursorIn;

|

||||

auto rng = fetchLedgerRange();

|

||||

if (!rng)

|

||||

return {{}, {}};

|

||||

|

||||

CassandraStatement statement = forward

|

||||

? CassandraStatement(selectNFTTxForward_)

|

||||

: CassandraStatement(selectNFTTx_);

|

||||

|

||||

statement.bindNextBytes(tokenID);

|

||||

|

||||

if (cursor)

|

||||

{

|

||||

statement.bindNextIntTuple(

|

||||

cursor->ledgerSequence, cursor->transactionIndex);

|

||||

BOOST_LOG_TRIVIAL(debug) << " token_id = " << ripple::strHex(tokenID)

|

||||

<< " tuple = " << cursor->ledgerSequence

|

||||

<< " : " << cursor->transactionIndex;

|

||||

}

|

||||

else

|

||||

{

|

||||

int const seq = forward ? rng->minSequence : rng->maxSequence;

|

||||

int const placeHolder =

|

||||

forward ? 0 : std::numeric_limits<std::uint32_t>::max();

|

||||

|

||||

statement.bindNextIntTuple(placeHolder, placeHolder);

|

||||

BOOST_LOG_TRIVIAL(debug)

|

||||

<< " token_id = " << ripple::strHex(tokenID) << " idx = " << seq

|

||||

<< " tuple = " << placeHolder;

|

||||

}

|

||||

|

||||

statement.bindNextUInt(limit);

|

||||

|

||||

CassandraResult result = executeAsyncRead(statement, yield);

|

||||

|

||||

if (!result.hasResult())

|

||||

{

|

||||

BOOST_LOG_TRIVIAL(debug) << __func__ << " - no rows returned";

|

||||

return {};

|

||||

}

|

||||

|

||||

std::vector<ripple::uint256> hashes = {};

|

||||

auto numRows = result.numRows();

|

||||

BOOST_LOG_TRIVIAL(info) << "num_rows = " << numRows;

|

||||

do

|

||||

{

|

||||

hashes.push_back(result.getUInt256());

|

||||

if (--numRows == 0)

|

||||

{

|

||||

BOOST_LOG_TRIVIAL(debug) << __func__ << " setting cursor";

|

||||

auto const [lgrSeq, txnIdx] = result.getInt64Tuple();

|

||||

cursor = {

|

||||

static_cast<std::uint32_t>(lgrSeq),

|

||||

static_cast<std::uint32_t>(txnIdx)};

|

||||

|

||||

if (forward)

|

||||

++cursor->transactionIndex;

|

||||

}

|

||||

} while (result.nextRow());

|

||||

|

||||

auto txns = fetchTransactions(hashes, yield);

|

||||

BOOST_LOG_TRIVIAL(debug) << __func__ << " txns = " << txns.size();

|

||||

|

||||

if (txns.size() == limit)

|

||||

{

|

||||

BOOST_LOG_TRIVIAL(debug) << __func__ << " returning cursor";

|

||||

return {txns, cursor};

|

||||

}

|

||||

|

||||

return {txns, {}};

|

||||

}

|

||||

|

||||

TransactionsAndCursor

|

||||

CassandraBackend::fetchAccountTransactions(

|

||||

ripple::AccountID const& account,

|

||||

std::uint32_t const limit,

|

||||

bool const forward,

|

||||

std::optional<AccountTransactionsCursor> const& cursorIn,

|

||||

std::optional<TransactionsCursor> const& cursorIn,

|

||||

boost::asio::yield_context& yield) const

|

||||

{

|

||||

auto rng = fetchLedgerRange();

|

||||

@@ -535,8 +701,8 @@ CassandraBackend::fetchAccountTransactions(

|

||||

}

|

||||

else

|

||||

{

|

||||

int seq = forward ? rng->minSequence : rng->maxSequence;

|

||||

int placeHolder =

|

||||

int const seq = forward ? rng->minSequence : rng->maxSequence;

|

||||

int const placeHolder =

|

||||

forward ? 0 : std::numeric_limits<std::uint32_t>::max();

|

||||

|

||||

statement.bindNextIntTuple(placeHolder, placeHolder);

|

||||

@@ -584,6 +750,7 @@ CassandraBackend::fetchAccountTransactions(

|

||||

|

||||

return {txns, {}};

|

||||

}

|

||||

|

||||

std::optional<ripple::uint256>

|

||||

CassandraBackend::doFetchSuccessorKey(

|

||||

ripple::uint256 key,

|

||||

@@ -1179,6 +1346,64 @@ CassandraBackend::open(bool readOnly)

|

||||

<< " LIMIT 1";

|

||||

if (!executeSimpleStatement(query.str()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "CREATE TABLE IF NOT EXISTS " << tablePrefix << "nf_tokens"

|

||||

<< " ("

|

||||

<< " token_id blob,"

|

||||

<< " sequence bigint,"

|

||||

<< " owner blob,"

|

||||

<< " is_burned boolean,"

|

||||

<< " PRIMARY KEY (token_id, sequence)"

|

||||

<< " )"

|

||||

<< " WITH CLUSTERING ORDER BY (sequence DESC)"

|

||||

<< " AND default_time_to_live = " << ttl;

|

||||

if (!executeSimpleStatement(query.str()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "SELECT * FROM " << tablePrefix << "nf_tokens"

|

||||

<< " LIMIT 1";

|

||||

if (!executeSimpleStatement(query.str()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "CREATE TABLE IF NOT EXISTS " << tablePrefix

|

||||

<< "issuer_nf_tokens"

|

||||

<< " ("

|

||||

<< " issuer blob,"

|

||||

<< " token_id blob,"

|

||||

<< " PRIMARY KEY (issuer, token_id)"

|

||||

<< " )";

|

||||

if (!executeSimpleStatement(query.str()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "SELECT * FROM " << tablePrefix << "issuer_nf_tokens"

|

||||

<< " LIMIT 1";

|

||||

if (!executeSimpleStatement(query.str()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "CREATE TABLE IF NOT EXISTS " << tablePrefix

|

||||

<< "nf_token_transactions"

|

||||

<< " ("

|

||||

<< " token_id blob,"

|

||||

<< " seq_idx tuple<bigint, bigint>,"

|

||||

<< " hash blob,"

|

||||

<< " PRIMARY KEY (token_id, seq_idx)"

|

||||

<< " )"

|

||||

<< " WITH CLUSTERING ORDER BY (seq_idx DESC)"

|

||||

<< " AND default_time_to_live = " << ttl;

|

||||

if (!executeSimpleStatement(query.str()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "SELECT * FROM " << tablePrefix << "nf_token_transactions"

|

||||

<< " LIMIT 1";

|

||||

if (!executeSimpleStatement(query.str()))

|

||||

continue;

|

||||

|

||||

setupSessionAndTable = true;

|

||||

}

|

||||

|

||||

@@ -1296,6 +1521,57 @@ CassandraBackend::open(bool readOnly)

|

||||

if (!selectAccountTxForward_.prepareStatement(query, session_.get()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "INSERT INTO " << tablePrefix << "nf_tokens"

|

||||

<< " (token_id,sequence,owner,is_burned)"

|

||||

<< " VALUES (?,?,?,?)";

|

||||

if (!insertNFT_.prepareStatement(query, session_.get()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "SELECT sequence,owner,is_burned"

|

||||

<< " FROM " << tablePrefix << "nf_tokens WHERE"

|

||||

<< " token_id = ? AND"

|

||||

<< " sequence <= ?"

|

||||

<< " ORDER BY sequence DESC"

|

||||

<< " LIMIT 1";

|

||||

if (!selectNFT_.prepareStatement(query, session_.get()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "INSERT INTO " << tablePrefix << "issuer_nf_tokens"

|

||||

<< " (issuer,token_id)"

|

||||

<< " VALUES (?,?)";

|

||||

if (!insertIssuerNFT_.prepareStatement(query, session_.get()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "INSERT INTO " << tablePrefix << "nf_token_transactions"

|

||||

<< " (token_id,seq_idx,hash)"

|

||||

<< " VALUES (?,?,?)";

|

||||

if (!insertNFTTx_.prepareStatement(query, session_.get()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "SELECT hash,seq_idx"

|

||||

<< " FROM " << tablePrefix << "nf_token_transactions WHERE"

|

||||

<< " token_id = ? AND"

|

||||

<< " seq_idx < ?"

|

||||

<< " ORDER BY seq_idx DESC"

|

||||

<< " LIMIT ?";

|

||||

if (!selectNFTTx_.prepareStatement(query, session_.get()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << "SELECT hash,seq_idx"

|

||||

<< " FROM " << tablePrefix << "nf_token_transactions WHERE"

|

||||

<< " token_id = ? AND"

|

||||

<< " seq_idx >= ?"

|

||||

<< " ORDER BY seq_idx ASC"

|

||||

<< " LIMIT ?";

|

||||

if (!selectNFTTxForward_.prepareStatement(query, session_.get()))

|

||||

continue;

|

||||

|

||||

query.str("");

|

||||

query << " INSERT INTO " << tablePrefix << "ledgers "

|

||||

<< " (sequence, header) VALUES(?,?)";

|

||||

|

||||

@@ -115,7 +115,7 @@ public:

|

||||

throw std::runtime_error(

|

||||

"CassandraStatement::bindNextBoolean - statement_ is null");

|

||||

CassError rc = cass_statement_bind_bool(

|

||||

statement_, 1, static_cast<cass_bool_t>(val));

|

||||

statement_, curBindingIndex_, static_cast<cass_bool_t>(val));

|

||||

if (rc != CASS_OK)

|

||||

{

|

||||

std::stringstream ss;

|

||||

@@ -481,6 +481,33 @@ public:

|

||||

return {first, second};

|

||||

}

|

||||

|

||||

// TODO: should be replaced with a templated implementation as is very

|

||||

// similar to other getters

|

||||

bool

|

||||

getBool()

|

||||

{

|

||||

if (!row_)

|

||||

{

|

||||

std::stringstream msg;

|

||||

msg << __func__ << " - no result";

|

||||

BOOST_LOG_TRIVIAL(error) << msg.str();

|

||||

throw std::runtime_error(msg.str());

|

||||

}

|

||||

cass_bool_t val;

|

||||

CassError rc =

|

||||

cass_value_get_bool(cass_row_get_column(row_, curGetIndex_), &val);

|

||||

if (rc != CASS_OK)

|

||||

{

|

||||

std::stringstream msg;

|

||||

msg << __func__ << " - error getting value: " << rc << ", "

|

||||

<< cass_error_desc(rc);

|

||||

BOOST_LOG_TRIVIAL(error) << msg.str();

|

||||

throw std::runtime_error(msg.str());

|

||||

}

|

||||

++curGetIndex_;

|

||||

return val;

|

||||

}

|

||||

|

||||

~CassandraResult()

|

||||

{

|

||||

if (result_ != nullptr)

|

||||

@@ -599,6 +626,12 @@ private:

|

||||

CassandraPreparedStatement insertAccountTx_;

|

||||

CassandraPreparedStatement selectAccountTx_;

|

||||

CassandraPreparedStatement selectAccountTxForward_;

|

||||

CassandraPreparedStatement insertNFT_;

|

||||

CassandraPreparedStatement selectNFT_;

|

||||

CassandraPreparedStatement insertIssuerNFT_;

|

||||

CassandraPreparedStatement insertNFTTx_;

|

||||

CassandraPreparedStatement selectNFTTx_;

|

||||

CassandraPreparedStatement selectNFTTxForward_;

|

||||

CassandraPreparedStatement insertLedgerHeader_;

|

||||

CassandraPreparedStatement insertLedgerHash_;

|

||||

CassandraPreparedStatement updateLedgerRange_;

|

||||

@@ -683,12 +716,12 @@ public:

|

||||

open_ = false;

|

||||

}

|

||||

|

||||

AccountTransactions

|

||||

TransactionsAndCursor

|

||||

fetchAccountTransactions(

|

||||

ripple::AccountID const& account,

|

||||

std::uint32_t const limit,

|

||||

bool forward,

|

||||

std::optional<AccountTransactionsCursor> const& cursor,

|

||||

std::optional<TransactionsCursor> const& cursor,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

bool

|

||||

@@ -852,6 +885,20 @@ public:

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

std::optional<NFT>

|

||||

fetchNFT(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

TransactionsAndCursor

|

||||

fetchNFTTransactions(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const limit,

|

||||

bool const forward,

|

||||

std::optional<TransactionsCursor> const& cursorIn,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

// Synchronously fetch the object with key key, as of ledger with sequence

|

||||

// sequence

|

||||

std::optional<Blob>

|

||||

@@ -941,6 +988,9 @@ public:

|

||||

writeAccountTransactions(

|

||||

std::vector<AccountTransactionsData>&& data) override;

|

||||

|

||||

void

|

||||

writeNFTTransactions(std::vector<NFTTransactionsData>&& data) override;

|

||||

|

||||

void

|

||||

writeTransaction(

|

||||

std::string&& hash,

|

||||

@@ -949,6 +999,9 @@ public:

|

||||

std::string&& transaction,

|

||||

std::string&& metadata) override;

|

||||

|

||||

void

|

||||

writeNFTs(std::vector<NFTsData>&& data) override;

|

||||

|

||||

void

|

||||

startWrites() const override

|

||||

{

|

||||

|

||||

@@ -9,8 +9,8 @@

|

||||

#include <backend/Pg.h>

|

||||

#include <backend/Types.h>

|

||||

|

||||

/// Struct used to keep track of what to write to transactions and

|

||||

/// account_transactions tables in Postgres

|

||||

/// Struct used to keep track of what to write to

|

||||

/// account_transactions/account_tx tables

|

||||

struct AccountTransactionsData

|

||||

{

|

||||

boost::container::flat_set<ripple::AccountID> accounts;

|

||||

@@ -32,6 +32,57 @@ struct AccountTransactionsData

|

||||

AccountTransactionsData() = default;

|

||||

};

|

||||

|

||||

/// Represents a link from a tx to an NFT that was targeted/modified/created

|

||||

/// by it. Gets written to nf_token_transactions table and the like.

|

||||

struct NFTTransactionsData

|

||||

{

|

||||

ripple::uint256 tokenID;

|

||||

std::uint32_t ledgerSequence;

|

||||

std::uint32_t transactionIndex;

|

||||

ripple::uint256 txHash;

|

||||

|

||||

NFTTransactionsData(

|

||||

ripple::uint256 const& tokenID,

|

||||

ripple::TxMeta const& meta,

|

||||

ripple::uint256 const& txHash)

|

||||

: tokenID(tokenID)

|

||||

, ledgerSequence(meta.getLgrSeq())

|

||||

, transactionIndex(meta.getIndex())

|

||||

, txHash(txHash)

|

||||

{

|

||||

}

|

||||

};

|

||||

|

||||

/// Represents an NFT state at a particular ledger. Gets written to nf_tokens

|

||||

/// table and the like.

|

||||

struct NFTsData

|

||||

{

|

||||

ripple::uint256 tokenID;

|

||||

std::uint32_t ledgerSequence;

|

||||

|

||||

// The transaction index is only stored because we want to store only the

|

||||

// final state of an NFT per ledger. Since we pull this from transactions

|

||||

// we keep track of which tx index created this so we can de-duplicate, as

|

||||

// it is possible for one ledger to have multiple txs that change the

|

||||

// state of the same NFT.

|

||||

std::uint32_t transactionIndex;

|

||||

ripple::AccountID owner;

|

||||

bool isBurned;

|

||||

|

||||

NFTsData(

|

||||

ripple::uint256 const& tokenID,

|

||||

ripple::AccountID const& owner,

|

||||

ripple::TxMeta const& meta,

|

||||

bool isBurned)

|

||||

: tokenID(tokenID)

|

||||

, ledgerSequence(meta.getLgrSeq())

|

||||

, transactionIndex(meta.getIndex())

|

||||

, owner(owner)

|

||||

, isBurned(isBurned)

|

||||

{

|

||||

}

|

||||

};

|

||||

|

||||

template <class T>

|

||||

inline bool

|

||||

isOffer(T const& object)

|

||||

|

||||

@@ -1,110 +0,0 @@

|

||||

#include <backend/LayeredCache.h>

|

||||

namespace Backend {

|

||||

|

||||

void

|

||||

LayeredCache::insert(

|

||||

ripple::uint256 const& key,

|

||||

Blob const& value,

|

||||

uint32_t seq)

|

||||

{

|

||||

auto entry = map_[key];

|

||||

// stale insert, do nothing

|

||||

if (seq <= entry.recent.seq)

|

||||

return;

|

||||

entry.old = entry.recent;

|

||||

entry.recent = {seq, value};

|

||||

if (value.empty())

|

||||

pendingDeletes_.push_back(key);

|

||||

if (!entry.old.blob.empty())

|

||||

pendingSweeps_.push_back(key);

|

||||

}

|

||||

|

||||

std::optional<Blob>

|

||||

LayeredCache::select(CacheEntry const& entry, uint32_t seq) const

|

||||

{

|

||||

if (seq < entry.old.seq)

|

||||

return {};

|

||||

if (seq < entry.recent.seq && !entry.old.blob.empty())

|

||||

return entry.old.blob;

|

||||

if (!entry.recent.blob.empty())

|

||||

return entry.recent.blob;

|

||||

return {};

|

||||

}

|

||||

void

|

||||

LayeredCache::update(std::vector<LedgerObject> const& blobs, uint32_t seq)

|

||||

{

|

||||

std::unique_lock lck{mtx_};

|

||||

if (seq > mostRecentSequence_)

|

||||

mostRecentSequence_ = seq;

|

||||

for (auto const& k : pendingSweeps_)

|

||||

{

|

||||

auto e = map_[k];

|

||||

e.old = {};

|

||||

}

|

||||

for (auto const& k : pendingDeletes_)

|

||||

{

|

||||

map_.erase(k);

|

||||

}

|

||||

for (auto const& b : blobs)

|

||||

{

|

||||

insert(b.key, b.blob, seq);

|

||||

}

|

||||

}

|

||||

std::optional<LedgerObject>

|

||||

LayeredCache::getSuccessor(ripple::uint256 const& key, uint32_t seq) const

|

||||

{

|

||||

ripple::uint256 curKey = key;

|

||||

while (true)

|

||||

{

|

||||

std::shared_lock lck{mtx_};

|

||||

if (seq < mostRecentSequence_ - 1)

|

||||

return {};

|

||||

auto e = map_.upper_bound(curKey);

|

||||

if (e == map_.end())

|

||||

return {};

|

||||

auto const& entry = e->second;

|

||||

auto blob = select(entry, seq);

|

||||

if (!blob)

|

||||

{

|

||||

curKey = e->first;

|

||||

continue;

|

||||

}

|

||||

else

|

||||

return {{e->first, *blob}};

|

||||

}

|

||||

}

|

||||

std::optional<LedgerObject>

|

||||

LayeredCache::getPredecessor(ripple::uint256 const& key, uint32_t seq) const

|

||||

{

|

||||

ripple::uint256 curKey = key;

|

||||

std::shared_lock lck{mtx_};

|

||||

while (true)

|

||||

{

|

||||

if (seq < mostRecentSequence_ - 1)

|

||||

return {};

|

||||

auto e = map_.lower_bound(curKey);

|

||||

--e;

|

||||

if (e == map_.begin())

|

||||

return {};

|

||||

auto const& entry = e->second;

|

||||

auto blob = select(entry, seq);

|

||||

if (!blob)

|

||||

{

|

||||

curKey = e->first;

|

||||

continue;

|

||||

}

|

||||

else

|

||||

return {{e->first, *blob}};

|

||||

}

|

||||

}

|

||||

std::optional<Blob>

|

||||

LayeredCache::get(ripple::uint256 const& key, uint32_t seq) const

|

||||

{

|

||||

std::shared_lock lck{mtx_};

|

||||

auto e = map_.find(key);

|

||||

if (e == map_.end())

|

||||

return {};

|

||||

auto const& entry = e->second;

|

||||

return select(entry, seq);

|

||||

}

|

||||

} // namespace Backend

|

||||

@@ -1,73 +0,0 @@

|

||||

#ifndef CLIO_LAYEREDCACHE_H_INCLUDED

|

||||

#define CLIO_LAYEREDCACHE_H_INCLUDED

|

||||

|

||||

#include <ripple/basics/base_uint.h>

|

||||

#include <backend/Types.h>

|

||||

#include <map>

|

||||

#include <mutex>

|

||||

#include <shared_mutex>

|

||||

#include <utility>

|

||||

#include <vector>

|

||||

namespace Backend {

|

||||

class LayeredCache

|

||||

{

|

||||

struct SeqBlobPair

|

||||

{

|

||||

uint32_t seq;

|

||||

Blob blob;

|

||||

};

|

||||

struct CacheEntry

|

||||

{

|

||||

SeqBlobPair recent;

|

||||

SeqBlobPair old;

|

||||

};

|

||||

|

||||

std::map<ripple::uint256, CacheEntry> map_;

|

||||

std::vector<ripple::uint256> pendingDeletes_;

|

||||

std::vector<ripple::uint256> pendingSweeps_;

|

||||

mutable std::shared_mutex mtx_;

|

||||

uint32_t mostRecentSequence_;

|

||||

|

||||

void

|

||||

insert(ripple::uint256 const& key, Blob const& value, uint32_t seq);

|

||||

|

||||

/*

|

||||

void

|

||||

insert(ripple::uint256 const& key, Blob const& value, uint32_t seq)

|

||||

{

|

||||

map_.emplace(key,{{seq,value,{}});

|

||||

}

|

||||

void

|

||||

update(ripple::uint256 const& key, Blob const& value, uint32_t seq)

|

||||

{

|

||||

auto& entry = map_.find(key);

|

||||

entry.old = entry.recent;

|

||||

entry.recent = {seq, value};

|

||||

pendingSweeps_.push_back(key);

|

||||

}

|

||||

void

|

||||

erase(ripple::uint256 const& key, uint32_t seq)

|

||||

{

|

||||

update(key, {}, seq);

|

||||

pendingDeletes_.push_back(key);

|

||||

}

|

||||

*/

|

||||

std::optional<Blob>

|

||||

select(CacheEntry const& entry, uint32_t seq) const;

|

||||

|

||||

public:

|

||||

void

|

||||

update(std::vector<LedgerObject> const& blobs, uint32_t seq);

|

||||

|

||||

std::optional<Blob>

|

||||

get(ripple::uint256 const& key, uint32_t seq) const;

|

||||

|

||||

std::optional<LedgerObject>

|

||||

getSuccessor(ripple::uint256 const& key, uint32_t seq) const;

|

||||

|

||||

std::optional<LedgerObject>

|

||||

getPredecessor(ripple::uint256 const& key, uint32_t seq) const;

|

||||

};

|

||||

|

||||

} // namespace Backend

|

||||

#endif

|

||||

@@ -2,6 +2,7 @@

|

||||

#include <boost/format.hpp>

|

||||

#include <backend/PostgresBackend.h>

|

||||

#include <thread>

|

||||

|

||||

namespace Backend {

|

||||

|

||||

// Type alias for async completion handlers

|

||||

@@ -77,6 +78,12 @@ PostgresBackend::writeAccountTransactions(

|

||||

}

|

||||

}

|

||||

|

||||

void

|

||||

PostgresBackend::writeNFTTransactions(std::vector<NFTTransactionsData>&& data)

|

||||

{

|

||||

throw std::runtime_error("Not implemented");

|

||||

}

|

||||

|

||||

void

|

||||

PostgresBackend::doWriteLedgerObject(

|

||||

std::string&& key,

|

||||

@@ -152,6 +159,12 @@ PostgresBackend::writeTransaction(

|

||||

<< '\t' << "\\\\x" << ripple::strHex(metadata) << '\n';

|

||||

}

|

||||

|

||||

void

|

||||

PostgresBackend::writeNFTs(std::vector<NFTsData>&& data)

|

||||

{

|

||||

throw std::runtime_error("Not implemented");

|

||||

}

|

||||

|

||||

std::uint32_t

|

||||

checkResult(PgResult const& res, std::uint32_t const numFieldsExpected)

|

||||

{

|

||||

@@ -419,6 +432,15 @@ PostgresBackend::fetchAllTransactionHashesInLedger(

|

||||

return {};

|

||||

}

|

||||

|

||||

std::optional<NFT>

|

||||

PostgresBackend::fetchNFT(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const

|

||||

{

|

||||

throw std::runtime_error("Not implemented");

|

||||

}

|

||||

|

||||

std::optional<ripple::uint256>

|

||||

PostgresBackend::doFetchSuccessorKey(

|

||||

ripple::uint256 key,

|

||||

@@ -637,12 +659,25 @@ PostgresBackend::fetchLedgerDiff(

|

||||

return {};

|

||||

}

|

||||

|

||||

AccountTransactions

|

||||

// TODO this implementation and fetchAccountTransactions should be

|

||||

// generalized

|

||||

TransactionsAndCursor

|

||||

PostgresBackend::fetchNFTTransactions(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const limit,

|

||||

bool forward,

|

||||

std::optional<TransactionsCursor> const& cursor,

|

||||

boost::asio::yield_context& yield) const

|

||||

{

|

||||

throw std::runtime_error("Not implemented");

|

||||

}

|

||||

|

||||

TransactionsAndCursor

|

||||

PostgresBackend::fetchAccountTransactions(

|

||||

ripple::AccountID const& account,

|

||||

std::uint32_t const limit,

|

||||

bool forward,

|

||||

std::optional<AccountTransactionsCursor> const& cursor,

|

||||

std::optional<TransactionsCursor> const& cursor,

|

||||

boost::asio::yield_context& yield) const

|

||||

{

|

||||

PgQuery pgQuery(pgPool_);

|

||||

|

||||

@@ -62,6 +62,20 @@ public:

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

std::optional<NFT>

|

||||

fetchNFT(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const ledgerSequence,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

TransactionsAndCursor

|

||||

fetchNFTTransactions(

|

||||

ripple::uint256 const& tokenID,

|

||||

std::uint32_t const limit,

|

||||

bool const forward,

|

||||

std::optional<TransactionsCursor> const& cursorIn,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

std::vector<LedgerObject>

|

||||

fetchLedgerDiff(

|

||||

std::uint32_t const ledgerSequence,

|

||||

@@ -87,12 +101,12 @@ public:

|

||||

std::uint32_t const sequence,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

AccountTransactions

|

||||

TransactionsAndCursor

|

||||

fetchAccountTransactions(

|

||||

ripple::AccountID const& account,

|

||||

std::uint32_t const limit,

|

||||

bool forward,

|

||||

std::optional<AccountTransactionsCursor> const& cursor,

|

||||

std::optional<TransactionsCursor> const& cursor,

|

||||

boost::asio::yield_context& yield) const override;

|

||||

|

||||

void

|

||||

@@ -120,10 +134,16 @@ public:

|

||||

std::string&& transaction,

|

||||

std::string&& metadata) override;

|

||||

|

||||

void

|

||||

writeNFTs(std::vector<NFTsData>&& data) override;

|

||||

|

||||

void

|

||||

writeAccountTransactions(

|

||||

std::vector<AccountTransactionsData>&& data) override;

|

||||

|

||||

void

|

||||

writeNFTTransactions(std::vector<NFTTransactionsData>&& data) override;

|

||||

|

||||

void

|

||||

open(bool readOnly) override;

|

||||

|

||||

|

||||

@@ -1,174 +1,132 @@

|

||||

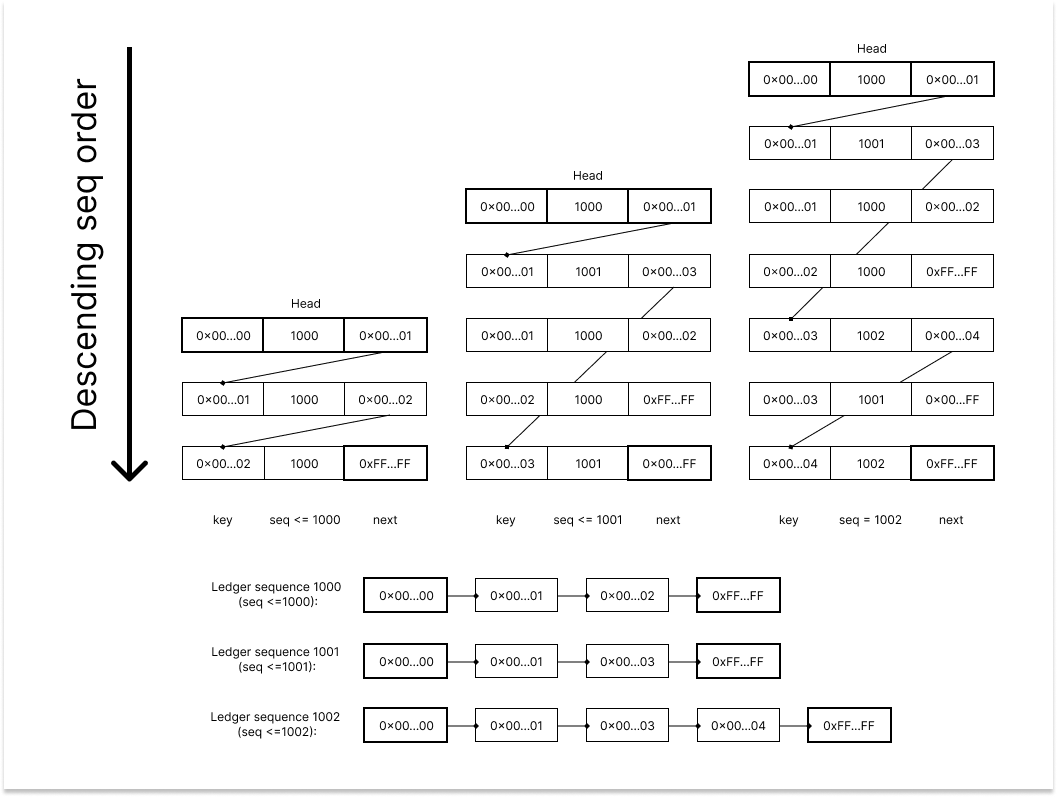

The data model used by clio is different than that used by rippled.

|

||||

rippled uses what is known as a SHAMap, which is a tree structure, with

|

||||

actual ledger and transaction data at the leaves of the tree. Looking up a record

|

||||

is a tree traversal, where the key is used to determine the path to the proper

|

||||

leaf node. The path from root to leaf is used as a proof-tree on the p2p network,

|

||||

where nodes can prove that a piece of data is present in a ledger by sending

|

||||

the path from root to leaf. Other nodes can verify this path and be certain

|

||||

that the data does actually exist in the ledger in question.

|

||||

# Clio Backend

|

||||

## Background

|

||||

The backend of Clio is responsible for handling the proper reading and writing of past ledger data from and to a given database. As of right now, Cassandra is the only supported database that is production-ready. However, support for more databases like PostgreSQL and DynamoDB may be added in future versions. Support for database types can be easily extended by creating new implementations which implements the virtual methods of `BackendInterface.h`. Then, use the Factory Object Design Pattern to simply add logic statements to `BackendFactory.h` that return the new database interface for a specific `type` in Clio's configuration file.

|

||||

|

||||

clio instead flattens the data model, so lookups are O(1). This results in time

|

||||

and space savings. This is possible because clio does not participate in the peer

|

||||

to peer protocol, and thus does not need to verify any data. clio fully trusts the

|

||||

rippled nodes that are being used as a data source.

|

||||

## Data Model

|

||||

The data model used by Clio to read and write ledger data is different from what Rippled uses. Rippled uses a novel data structure named [*SHAMap*](https://github.com/ripple/rippled/blob/master/src/ripple/shamap/README.md), which is a combination of a Merkle Tree and a Radix Trie. In a SHAMap, ledger objects are stored in the root vertices of the tree. Thus, looking up a record located at the leaf node of the SHAMap executes a tree search, where the path from the root node to the leaf node is the key of the record. Rippled nodes can also generate a proof-tree by forming a subtree with all the path nodes and their neighbors, which can then be used to prove the existnce of the leaf node data to other Rippled nodes. In short, the main purpose of the SHAMap data structure is to facilitate the fast validation of data integrity between different decentralized Rippled nodes.

|

||||

|

||||

clio uses certain features of database query languages to make this happen. Many

|

||||

databases provide the necessary features to implement the clio data model. At the

|

||||

time of writing, the data model is implemented in PostgreSQL and CQL (the query

|

||||

language used by Apache Cassandra and ScyllaDB).

|

||||

Since Clio only extracts past validated ledger data from a group of trusted Rippled nodes, it can be safely assumed that these ledger data are correct without the need to validate with other nodes in the XRP peer-to-peer network. Because of this, Clio is able to use a flattened data model to store the past validated ledger data, which allows for direct record lookup with much faster constant time operations.

|

||||

|

||||

The below examples are a sort of pseudo query language

|

||||

There are three main types of data in each XRP ledger version, they are [Ledger Header](https://xrpl.org/ledger-header.html), [Transaction Set](https://xrpl.org/transaction-formats.html) and [State Data](https://xrpl.org/ledger-object-types.html). Due to the structural differences of the different types of databases, Clio may choose to represent these data using a different schema for each unique database type.

|

||||

|

||||

## Ledgers

|

||||

**Keywords**

|

||||

*Sequence*: A unique incrementing identification number used to label the different ledger versions.

|

||||

*Hash*: The SHA512-half (calculate SHA512 and take the first 256 bits) hash of various ledger data like the entire ledger or specific ledger objects.

|

||||

*Ledger Object*: The [binary-encoded](https://xrpl.org/serialization.html) STObject containing specific data (i.e. metadata, transaction data).

|

||||

*Metadata*: The data containing [detailed information](https://xrpl.org/transaction-metadata.html#transaction-metadata) of the outcome of a specific transaction, regardless of whether the transaction was successful.

|

||||

*Transaction data*: The data containing the [full details](https://xrpl.org/transaction-common-fields.html) of a specific transaction.

|

||||

*Object Index*: The pseudo-random unique identifier of a ledger object, created by hashing the data of the object.

|

||||

|

||||

We store ledger headers in a ledgers table. In PostgreSQL, we store

|

||||

the headers in their deserialized form, so we can look up by sequence or hash.

|

||||

## Cassandra Implementation

|

||||

Cassandra is a distributed wide-column NoSQL database designed to handle large data throughput with high availability and no single point of failure. By leveraging Cassandra, Clio will be able to quickly and reliably scale up when needed simply by adding more Cassandra nodes to the Cassandra cluster configuration.

|

||||

|

||||

In Cassandra, we store the headers as blobs. The primary table maps a ledger sequence

|

||||

to the blob, and a secondary table maps a ledger hash to a ledger sequence.

|

||||

In Cassandra, Clio will be creating 9 tables to store the ledger data, they are `ledger_transactions`, `transactions`, `ledger_hashes`, `ledger_range`, `objects`, `ledgers`, `diff`, `account_tx`, and `successor`. Their schemas and how they work are detailed below.

|

||||

|

||||

## Transactions

|

||||

Transactions are stored in a very basic table, with a schema like so:

|

||||

*Note, if you would like visually explore the data structure of the Cassandra database, you can first run Clio server with database `type` configured as `cassandra` to fill ledger data from Rippled nodes into Cassandra, then use a GUI database management tool like [Datastax's Opcenter](https://docs.datastax.com/en/install/6.0/install/opscInstallOpsc.html) to interactively view it.*

|

||||

|

||||

|

||||

### `ledger_transactions`

|

||||

```

|

||||

CREATE TABLE transactions (

|

||||

hash blob,

|

||||

ledger_sequence int,

|

||||

transaction blob,

|

||||

PRIMARY KEY(hash))

|

||||

CREATE TABLE clio.ledger_transactions (

|

||||

ledger_sequence bigint, # The sequence number of the ledger version

|

||||

hash blob, # Hash of all the transactions on this ledger version

|

||||

PRIMARY KEY (ledger_sequence, hash)

|

||||

) WITH CLUSTERING ORDER BY (hash ASC) ...

|

||||

```

|

||||

This table stores the hashes of all transactions in a given ledger sequence ordered by the hash value in ascending order.

|

||||

|

||||

### `transactions`

|

||||

```

|

||||

The primary key is the hash.

|

||||

CREATE TABLE clio.transactions (

|

||||

hash blob PRIMARY KEY, # The transaction hash

|

||||

date bigint, # Date of the transaction

|

||||

ledger_sequence bigint, # The sequence that the transaction was validated

|

||||

metadata blob, # Metadata of the transaction

|

||||

transaction blob # Data of the transaction

|

||||

) ...

|

||||

```

|

||||

This table stores the full transaction and metadata of each ledger version with the transaction hash as the primary key.

|

||||

|

||||

A common query pattern is fetching all transactions in a ledger. In PostgreSQL,

|

||||

nothing special is needed for this. We just query:

|

||||

To look up all the transactions that were validated in a ledger version with sequence `n`, one can first get the all the transaction hashes in that ledger version by querying `SELECT * FROM ledger_transactions WHERE ledger_sequence = n;`. Then, iterate through the list of hashes and query `SELECT * FROM transactions WHERE hash = one_of_the_hash_from_the_list;` to get the detailed transaction data.

|

||||

|

||||

### `ledger_hashes`

|

||||

```

|

||||

SELECT * FROM transactions WHERE ledger_sequence = s;

|

||||

CREATE TABLE clio.ledger_hashes (

|

||||

hash blob PRIMARY KEY, # Hash of entire ledger version's data

|

||||

sequence bigint # The sequence of the ledger version

|

||||

) ...

|

||||

```

|

||||

This table stores the hash of all ledger versions by their sequences.

|

||||

### `ledger_range`

|

||||

```

|

||||

Cassandra doesn't handle queries like this well, since `ledger_sequence` is not

|

||||

the primary key, so we use a second table that maps a ledger sequence number

|

||||

to all of the hashes in that ledger:

|

||||

CREATE TABLE clio.ledger_range (

|

||||

is_latest boolean PRIMARY KEY, # Whether this sequence is the stopping range

|

||||

sequence bigint # The sequence number of the starting/stopping range

|

||||

) ...

|

||||

```

|

||||

This table marks the range of ledger versions that is stored on this specific Cassandra node. Because of its nature, there are only two records in this table with `false` and `true` values for `is_latest`, marking the starting and ending sequence of the ledger range.

|

||||

|

||||

### `objects`

|

||||

```

|

||||

CREATE TABLE transaction_hashes (

|

||||

ledger_sequence int,

|

||||

hash blob,

|

||||

PRIMARY KEY(ledger_sequence, blob))

|

||||

CREATE TABLE clio.objects (

|

||||

key blob, # Object index of the object

|

||||

sequence bigint, # The sequence this object was last updated

|

||||

object blob, # Data of the object

|

||||

PRIMARY KEY (key, sequence)

|

||||

) WITH CLUSTERING ORDER BY (sequence DESC) ...

|

||||

```

|

||||

This table stores the specific data of all objects that ever existed on the XRP network, even if they are deleted (which is represented with a special `0x` value). The records are ordered by descending sequence, where the newest validated ledger objects are at the top.

|

||||

|

||||

This table is updated when all data for a given ledger sequence has been written to the various tables in the database. For each ledger, many associated records are written to different tables. This table is used as a synchronization mechanism, to prevent the application from reading data from a ledger for which all data has not yet been fully written.

|

||||

|

||||

### `ledgers`

|

||||

```

|

||||

This table uses a compound primary key, so we can have multiple records with

|

||||

the same ledger sequence but different hash. Looking up all of the transactions

|

||||

in a given ledger then requires querying the transaction_hashes table to get the hashes of

|

||||

all of the transactions in the ledger, and then using those hashes to query the

|

||||

transactions table. Sometimes we only want the hashes though.

|

||||

|

||||

## Ledger data

|

||||

|

||||

Ledger data is more complicated than transaction data. Objects have different versions,

|

||||

where applying transactions in a particular ledger changes an object with a given

|

||||

key. A basic example is an account root object: the balance changes with every

|

||||

transaction sent or received, though the key (object ID) for this object remains the same.

|

||||

|

||||

Ledger data then is modeled like so:

|

||||

CREATE TABLE clio.ledgers (

|

||||

sequence bigint PRIMARY KEY, # Sequence of the ledger version

|

||||

header blob # Data of the header

|

||||

) ...

|

||||

```

|

||||

This table stores the ledger header data of specific ledger versions by their sequence.

|

||||

|

||||

### `diff`

|

||||

```

|

||||

CREATE TABLE objects (

|

||||

id blob,

|

||||

ledger_sequence int,

|

||||

object blob,

|

||||

PRIMARY KEY(key,ledger_sequence))

|

||||

CREATE TABLE clio.diff (

|

||||

seq bigint, # Sequence of the ledger version

|

||||

key blob, # Hash of changes in the ledger version

|

||||

PRIMARY KEY (seq, key)

|

||||

) WITH CLUSTERING ORDER BY (key ASC) ...

|

||||

```

|

||||

This table stores the object index of all the changes in each ledger version.

|

||||

|

||||

### `account_tx`

|

||||

```

|

||||

CREATE TABLE clio.account_tx (

|

||||

account blob,

|

||||

seq_idx frozen<tuple<bigint, bigint>>, # Tuple of (ledger_index, transaction_index)

|

||||

hash blob, # Hash of the transaction

|

||||

PRIMARY KEY (account, seq_idx)

|

||||

) WITH CLUSTERING ORDER BY (seq_idx DESC) ...

|

||||

```

|

||||

This table stores the list of transactions affecting a given account. This includes transactions made by the account, as well as transactions received.

|

||||

|

||||

The `objects` table has a compound primary key. This is essential. Looking up

|

||||

a ledger object as of a given ledger then is just:

|

||||

|

||||

### `successor`

|

||||

```

|

||||

SELECT object FROM objects WHERE id = ? and ledger_sequence <= ?

|

||||

ORDER BY ledger_sequence DESC LIMIT 1;

|

||||

```

|

||||

This gives us the most recent ledger object written at or before a specified ledger.

|

||||

CREATE TABLE clio.successor (

|

||||

key blob, # Object index

|

||||

seq bigint, # The sequnce that this ledger object's predecessor and successor was updated

|

||||